
As part of my final-year project, I worked on integrating LLVM and Clang into Buildroot, and this article presents a summary of that work. It assumes familiarity with the main aspects of Buildroot, such as cross-compilation and package creation. I wrote it in English so that it can be shared with the whole Buildroot community. If you want to go further, a link to my internship report is provided at the end of the article.

In this article I'll be discussing my internship project: the integration of LLVM and Clang into Buildroot. LLVM, as a compiler infrastructure, can play two roles in Buildroot. On the one hand, it can be a target package that provides functionality such as code optimization and just-in-time compilation to other packages; on the other hand, it opens the possibility of creating a cross-compilation toolchain that could be an alternative to Buildroot’s default one, which is based on GNU tools.

This article is mainly focused on LLVM as a target package. Nevertheless, it also discusses some relevant aspects which need to be considered when building an LLVM/Clang-based cross-compilation toolchain.

The article first introduces the technologies involved in the project, in order to give the reader the background needed to understand its main objectives and how the software components interact with each other.

LLVM

LLVM is an open source project that provides a set of low-level toolchain components (assemblers, compilers, debuggers, etc.) designed to be compatible with existing tools typically used on Unix systems. While LLVM provides some unique capabilities and is known for some of its tools, such as Clang (a C/C++/Objective-C/OpenCL C compiler frontend), the main thing that distinguishes LLVM from other compilers is its internal architecture.

This project is different from most traditional compiler projects (such as GCC) because it is not just a collection of individual programs, but rather a collection of libraries that can be used to build compilers, optimizers, JIT code generators and other compiler-related programs. LLVM is an umbrella project, which means that it has several subprojects, such as LLVM Core (main libraries), Clang, lldb, compiler-rt, libclc, and lld among others.

Nowadays, LLVM is used as a base platform for implementing both statically compiled and runtime-compiled programming languages, such as C/C++, Java, Kotlin, Rust and Swift. However, LLVM is not only used as a traditional toolchain; it is also popular in graphics, for example:

• llvmpipe (software rasterizer)

• CUDA (NVIDIA Compiler SDK based on LLVM)

• AMDGPU open source drivers

• Most OpenCL implementations are based on Clang/LLVM

Internal aspects

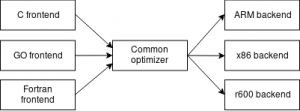

LLVM's compilation strategy follows a three-phase approach where the main components are: the frontend, the optimizer and the backend. Each phase is responsible for translating the input program into a different representation, making it closer to the target language.

Figure 1: Three-phase approach

Frontend

The frontend is the component in charge of validating the input source code, checking and diagnosing errors, and translating it from its original language (e.g. C/C++) into an intermediate representation (LLVM IR in this case) through lexical, syntactic and semantic analysis. Apart from the translation, the frontend can also perform language-specific optimizations.

LLVM IR

The LLVM IR is a complete virtual instruction set used throughout all phases of the compilation strategy. Its main characteristics are the following:

• Mostly architecture-independent instruction set (RISC)

• Strongly typed

– Single value types (eg. i8, i32, double)

– Pointer types (eg. *i8, *i32)

– Array types, structure types, function types, etc.

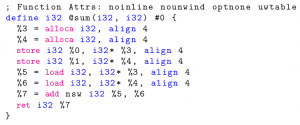

• Unlimited number of virtual registers in Static Single Assignment (SSA)

The intermediate representation is the core of LLVM. It is fairly readable, as it was designed to be easy for frontends to generate while being expressive enough to allow effective optimizations that produce fast code for real targets. LLVM IR exists in three forms: a human-readable textual assembly format (.ll), an in-memory data structure, and an on-disk binary “bitcode” format (.bc). LLVM provides tools to convert from the textual format to bitcode (llvm-as) and vice versa (llvm-dis). Figure 2 shows an example of what LLVM IR looks like:

Figure 2: LLVM Intermediate Representation
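To make the SSA property more concrete, the following sketch renames a straight-line sequence of assignments so that every variable is defined exactly once, which is the discipline LLVM IR's virtual registers follow. It is a toy under stated assumptions: the `Assign` type and `toSSA` function are hypothetical, and control flow is ignored (real SSA construction also inserts phi nodes where branches join).

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical three-address assignment: dest = op(operands...)
struct Assign {
    std::string dest;
    std::string op;
    std::vector<std::string> operands;
};

// Rename straight-line code into SSA form: each assignment creates a fresh
// version (x1, x2, ...) and every use refers to the most recent version.
// Names that are never assigned (inputs, literals) are left unchanged.
std::vector<Assign> toSSA(const std::vector<Assign>& code) {
    std::map<std::string, int> version;  // latest version of each variable
    std::vector<Assign> out;
    for (const Assign& a : code) {
        Assign r{"", a.op, {}};
        // Uses are renamed before the definition, so "x = x * 2" reads the old x.
        for (const std::string& use : a.operands) {
            auto it = version.find(use);
            r.operands.push_back(it == version.end()
                                     ? use
                                     : use + std::to_string(it->second));
        }
        int v = ++version[a.dest];  // first definition becomes version 1
        r.dest = a.dest + std::to_string(v);
        out.push_back(r);
    }
    return out;
}
```

For instance, `x = a + b; x = x * 2; y = x + 1` becomes `x1 = a + b; x2 = x1 * 2; y1 = x2 + 1`, so each name now has a single definition.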

Optimizer

In general, the two main objectives of the optimization phase are to reduce the execution time of the program and to reduce its code size. LLVM's strategy is designed to produce high-performance executables through a system of continuous optimization. Because LLVM optimizations are implemented as modular units called passes, it is possible to run all of them or only a subset. There are two kinds of passes: analysis passes and transformation passes. Analysis passes compute information about a unit of IR (a module, function, basic block or instruction) without modifying it, and produce a result that other passes can query. Transformation passes, on the other hand, rewrite a unit of IR, producing more efficient code (still in IR form). Every LLVM pass has a specific objective, such as dead code elimination, constant propagation, combination of redundant instructions, dead argument elimination, and many others.
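The split between analysis and transformation passes can be illustrated on a deliberately tiny IR. The sketch below is not LLVM's pass manager API: the `Inst` type and the three functions are hypothetical. `countUses` is an analysis (it only reads the IR and returns a result), while `foldConstants` (constant folding and propagation) and `eliminateDeadCode` are transformations; the dead code elimination pass queries the analysis result, mirroring the relationship described above.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical SSA-like instruction: "const" defines dest = value,
// "add"/"mul" combine two earlier values, "ret" returns args[0].
struct Inst {
    std::string op;
    std::string dest;               // empty for "ret"
    std::vector<std::string> args;  // names of earlier values
    long value = 0;                 // only meaningful for "const"
};

using Function = std::vector<Inst>;

// Analysis pass: reads the IR and returns a result other passes can query.
std::map<std::string, int> countUses(const Function& f) {
    std::map<std::string, int> uses;
    for (const Inst& i : f)
        for (const std::string& a : i.args) ++uses[a];
    return uses;
}

// Transformation pass: an add/mul whose operands are both known constants
// is rewritten into a "const" instruction, whose value then propagates.
bool foldConstants(Function& f) {
    std::map<std::string, long> known;
    bool changed = false;
    for (Inst& i : f) {
        if ((i.op == "add" || i.op == "mul") &&
            known.count(i.args[0]) && known.count(i.args[1])) {
            long l = known[i.args[0]], r = known[i.args[1]];
            i.value = (i.op == "add") ? l + r : l * r;
            i.op = "const";
            i.args.clear();
            changed = true;
        }
        if (i.op == "const") known[i.dest] = i.value;
    }
    return changed;
}

// Transformation pass: removes unused, side-effect-free instructions,
// re-running the countUses analysis until nothing else can be removed.
bool eliminateDeadCode(Function& f) {
    bool changedAny = false;
    for (bool changed = true; changed;) {
        changed = false;
        std::map<std::string, int> uses = countUses(f);
        for (auto it = f.begin(); it != f.end();) {
            if (it->op != "ret" && uses[it->dest] == 0) {
                it = f.erase(it);
                changed = changedAny = true;
            } else {
                ++it;
            }
        }
    }
    return changedAny;
}
```

Running the folding pass first exposes more dead code: once `c = a + b` becomes a constant, `a` and `b` lose their uses and the next pass can delete them.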

Backend

This component, also known as the code generator, is responsible for translating a program in LLVM IR into optimized target-specific assembly. The main tasks carried out by the backend are instruction selection, register allocation and instruction scheduling. Instruction selection is the process of translating LLVM IR operations into instructions available on the target architecture, taking advantage of specific hardware features that can lead to more efficient code. Register allocation maps the variables stored in the IR's virtual registers onto the real registers available on the target architecture, taking into consideration the calling convention defined by the ABI. Once these tasks and others, such as memory allocation and instruction ordering, have been performed, the backend emits the corresponding code, either as a textual assembly file or as an ELF object file.
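Register allocation can be sketched with a simplified linear-scan allocator, one classic technique for this task; LLVM's default allocator is a more elaborate greedy allocator, and this code is not it. Each virtual register is described by a hypothetical live interval [start, end] in instruction indices. Intervals are visited by start point, registers are released when an interval expires, and when no register is free the interval that ends last is spilled to the stack. Register classes and calling conventions are ignored.

```cpp
#include <algorithm>
#include <map>
#include <string>
#include <vector>

// Hypothetical live interval of a virtual register (instruction indices).
struct Interval {
    std::string vreg;
    int start;
    int end;
};

// Returns virtual register -> physical register index, or -1 if spilled.
std::map<std::string, int> linearScan(std::vector<Interval> intervals,
                                      int numPhysRegs) {
    std::sort(intervals.begin(), intervals.end(),
              [](const Interval& a, const Interval& b) { return a.start < b.start; });
    std::map<std::string, int> assignment;
    std::vector<Interval> active;  // intervals currently holding a register
    std::vector<int> freeRegs;
    for (int r = numPhysRegs - 1; r >= 0; --r) freeRegs.push_back(r);

    for (const Interval& cur : intervals) {
        // Expire intervals that ended before this one starts.
        for (auto it = active.begin(); it != active.end();) {
            if (it->end < cur.start) {
                freeRegs.push_back(assignment[it->vreg]);
                it = active.erase(it);
            } else {
                ++it;
            }
        }
        if (!freeRegs.empty()) {
            assignment[cur.vreg] = freeRegs.back();
            freeRegs.pop_back();
            active.push_back(cur);
            continue;
        }
        // No free register: spill whichever interval ends last.
        auto last = std::max_element(active.begin(), active.end(),
            [](const Interval& a, const Interval& b) { return a.end < b.end; });
        if (last != active.end() && last->end > cur.end) {
            assignment[cur.vreg] = assignment[last->vreg];  // take its register
            assignment[last->vreg] = -1;                    // spilled to the stack
            *last = cur;
        } else {
            assignment[cur.vreg] = -1;
        }
    }
    return assignment;
}
```

With two physical registers and intervals a [0,10], b [1,3], c [2,8], d [4,6], the long-lived `a` is spilled so that `c` gets a register, and `d` reuses the register freed when `b` expires.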

Retargetability

The main advantage of the three-phase model adopted by LLVM is that components can be reused, since the optimizer always works on LLVM IR. This eases the task of supporting new languages: a new frontend that generates LLVM IR can reuse the existing optimizer and backends. Likewise, support for a new target architecture can be added by writing a backend and reusing the existing frontends and optimizer.
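The reuse argument can be expressed as a pair of interfaces around a shared IR. In the hypothetical sketch below (none of these class names exist in LLVM), any frontend can be paired with any backend through the common `IRModule` type, so supporting N languages on M architectures needs N frontends plus M backends, all sharing one optimizer, rather than N × M separate compilers.

```cpp
#include <string>
#include <vector>

// Shared, language- and target-independent representation (stand-in for LLVM IR).
struct IRModule {
    std::vector<std::string> instructions;
};

// A frontend translates one source language into the shared IR.
class Frontend {
public:
    virtual ~Frontend() = default;
    virtual IRModule translate(const std::string& source) const = 0;
};

// A backend lowers the shared IR to one target architecture.
class Backend {
public:
    virtual ~Backend() = default;
    virtual std::string emit(const IRModule& module) const = 0;
};

// Shared optimizer: works on IR only, so every frontend/backend pair reuses it.
// Placeholder transformation: drop no-op instructions.
IRModule optimize(IRModule m) {
    std::vector<std::string> kept;
    for (const std::string& inst : m.instructions)
        if (inst != "nop") kept.push_back(inst);
    m.instructions = kept;
    return m;
}

// Any frontend can be combined with any backend through the IR.
std::string compile(const Frontend& fe, const Backend& be, const std::string& src) {
    return be.emit(optimize(fe.translate(src)));
}

// Toy components, for illustration only.
class ToyCFrontend : public Frontend {
public:
    IRModule translate(const std::string& source) const override {
        return IRModule{{"nop", "ret " + source}};
    }
};

class ToyArmBackend : public Backend {
public:
    std::string emit(const IRModule& m) const override {
        std::string out;
        for (const std::string& inst : m.instructions) out += "arm:" + inst + ";";
        return out;
    }
};
```

Adding another architecture would mean writing one more `Backend` subclass; `ToyCFrontend` and `optimize` would stay unchanged.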

Clang

Clang is an open source compiler frontend for C/C++, Objective-C and OpenCL C built on LLVM, so it can use LLVM’s optimizer to produce efficient code. Since the start of its development in 2005, Clang has focused on providing expressive diagnostics and easy IDE integration. Like LLVM, it is written in C++ and has a library-based architecture, which allows, for example, IDEs to use its parser to help developers with autocompletion and refactoring. Clang was designed to be compatible with GCC, so it accepts most of GCC’s command-line options. However, GCC offers many extensions to the standard language, whereas Clang aims to be standard-compliant. Because of this, Clang cannot replace GCC when compiling projects that depend on GCC extensions, as is the case with the Linux kernel. Linux does not build with Clang because Clang does not accept the following kinds of constructs:

• Variable length arrays inside structures

• Nested functions

• Explicit register variables

Furthermore, the Linux kernel still depends on the GNU assembler and linker.

An interesting feature of Clang is that, unlike GCC, it can compile for multiple targets from the same binary; in other words, it is a cross-compiler itself. To select the target for which code is generated, the target triple must be specified on the command line with the --target=<triple> option. For example, --target=armv7-linux-gnueabihf corresponds to the following system:

• Architecture: arm

• Sub-architecture: v7

• Vendor: unknown

• OS: linux

• Environment: gnueabihf (GNU, EABI, hard-float)
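The decomposition of a triple into its components can be sketched as follows. This is a simplified parser written for illustration, not LLVM's `llvm::Triple` class: the `TargetTriple` type is hypothetical, a three-component triple is assumed to be arch-os-environment with an "unknown" vendor, and only the "armvN" sub-architecture split is handled. Note that the environment component is the full string after the OS, here `gnueabihf`.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Components of a target triple (hypothetical type).
struct TargetTriple {
    std::string arch, subArch, vendor, os, environment;
};

// Simplified parser: splits on '-', treats a 3-component triple as
// arch-os-environment with an "unknown" vendor, and splits an "armvN"
// architecture into "arm" + "vN". llvm::Triple handles far more cases.
TargetTriple parseTriple(const std::string& triple) {
    std::vector<std::string> parts;
    std::stringstream ss(triple);
    for (std::string p; std::getline(ss, p, '-');) parts.push_back(p);

    TargetTriple t;
    t.vendor = "unknown";
    if (parts.size() == 3) {
        t.arch = parts[0];
        t.os = parts[1];
        t.environment = parts[2];
    } else if (parts.size() == 4) {
        t.arch = parts[0];
        t.vendor = parts[1];
        t.os = parts[2];
        t.environment = parts[3];
    } else {
        return t;  // other forms are out of scope for this sketch
    }
    if (t.arch.rfind("armv", 0) == 0) {  // e.g. "armv7" -> "arm" + "v7"
        t.subArch = t.arch.substr(3);
        t.arch = "arm";
    }
    return t;
}
```

A four-component tuple such as `aarch64-buildroot-linux-gnu` carries an explicit vendor, which is why the vendor only defaults to "unknown" when it is omitted.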

Linux graphics stack

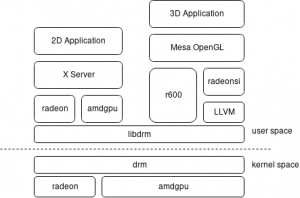

This section introduces the Linux graphics stack in order to explain the role of LLVM inside this complex system, made up of many open source components that interact with each other. Fig. 3 shows all the components involved when 2D and 3D applications request rendering services from an AMD GPU using X:

Figure 3: Typical Linux open source graphics stack for AMD GPUs

X Window System

X is a software system that provides 2D rendering services, allowing applications to create graphical user interfaces. It is based on a client-server architecture and exposes its services, such as managing windows, displays and input devices, through two shared libraries called Xlib and XCB. Because X relies on a network-oriented client-server protocol, the latency it introduces makes it inefficient for 3D applications. For this reason, a software system called the Direct Rendering Infrastructure (DRI) provides a faster path between applications and graphics hardware.

The DRI/DRM infrastructure

The Direct Rendering Infrastructure is a subsystem that allows applications using the X server to communicate with the graphics hardware directly. The most important component of DRI is the Direct Rendering Manager (DRM), a kernel module that provides multiple services:

• GPU initialization, such as uploading firmware or setting up DMA areas.

• Kernel Mode Setting (KMS): setting display resolution, colour depth and refresh rate.

• Multiplexing access to rendering hardware among multiple user-space applications.

• Video memory management and security.

DRM exposes its services to user-space applications through libdrm. As most of these services are device-specific, libdrm provides device-specific libraries for each GPU family, such as libdrm_intel, libdrm_radeon, libdrm_amdgpu and libdrm_nouveau. libdrm is intended to be used by X server display drivers (such as xserver-xorg-video-radeon, xserver-xorg-video-nvidia, etc.) and by Mesa 3D, which provides an open source implementation of the OpenGL specification.

Mesa 3D

OpenGL is a specification that describes an API for rendering 2D and 3D graphics by exploiting the capabilities of the underlying hardware. Mesa 3D is a collection of open source user-space graphics drivers that implement a translation layer between OpenGL and the kernel-space graphics drivers; Mesa exposes the OpenGL API as libGL.so. Mesa takes advantage of the DRI/DRM infrastructure to access the hardware directly and outputs its graphics to a window allocated by the X server. This is done through GLX, an extension that binds OpenGL to the X Window System.

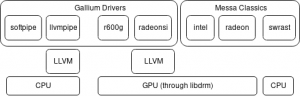

Mesa provides multiple drivers for AMD, NVIDIA and Intel GPUs, as well as some software implementations of 3D rendering, which are useful for platforms that do not have a dedicated GPU. Mesa drivers are divided into two groups: classic Mesa drivers and Gallium 3D drivers. Gallium 3D is a set of utilities and common code shared by multiple drivers, such as nouveau (NVIDIA), RadeonSI (AMD GCN) and softpipe (CPU).

As shown in Fig. 4, LLVM is used by llvmpipe and RadeonSI, and it can optionally be used by r600g if OpenCL support is needed. llvmpipe is a multithreaded software rasterizer that uses LLVM to JIT-compile GLSL shaders: shaders, point/line/triangle rasterization and vertex processing are implemented in LLVM IR, which is then translated to machine code. Another, more optimized, software rasterizer is OpenSWR, which is developed by Intel and targets x86_64 processors with AVX or AVX2 capabilities. Both llvmpipe and OpenSWR are much faster alternatives to Mesa’s classic single-threaded softpipe rasterizer.

Figure 4: Mesa 3D drivers

LLVM/Clang for Buildroot

The main objective of this internship was to create LLVM and Clang packages for Buildroot. These packages enable new functionality, such as Mesa 3D’s llvmpipe software rasterizer (useful for systems that do not have a dedicated GPU) and RadeonSI (the Gallium 3D driver for AMD GCN), and also provide the components needed to integrate OpenCL implementations. Once LLVM is present on the system, new packages that rely on this infrastructure can be added.

Buildroot Developers Meeting

After some research into the state of the art of the LLVM project, the objectives of the internship were presented and discussed at the Buildroot Developers Meeting in Brussels, where the following conclusions were reached:

• LLVM itself is very useful for other packages (Mesa 3D’s llvmpipe, OpenJDK’s JIT compiler, etc.).

• It is questionable whether there is a need for Clang in Buildroot, as GCC is still needed and has mostly caught up with Clang regarding performance, diagnostics and static analysis. It would be possible to build a complete user space with Clang, but some packages may break.

• It could be useful to have a host-clang package that is user-selectable.

• The long-term goal is to have a complete Clang-based toolchain.

LLVM package

LLVM comes as a set of libraries with many purposes, such as working with LLVM IR, running analysis or transformation passes, code generation, etc. The build system can gather all these components into a single shared library, libLLVM.so, which is the only file that needs to be installed on the target system to provide LLVM support to other packages.

Some considerations

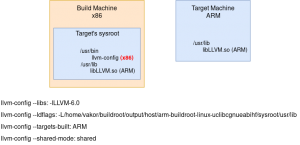

In order to cross-compile LLVM for the target, the llvm-config and llvm-tblgen tools must first be compiled for the host. At the start of the project, a minimal host-llvm containing only these two tools was built by setting:

```make
HOST_LLVM_MAKE_OPTS = llvm-tblgen llvm-config
```

The most important CMake options to set are the following:

• Path to host’s llvm-tblgen: -DLLVM_TABLEGEN

• Default target triple: -DLLVM_DEFAULT_TARGET_TRIPLE

• Host triple (native code generation for the target): -DLLVM_HOST_TRIPLE

• Target architecture: -DLLVM_TARGET_ARCH

• Targets to build (only necessary backends): -DLLVM_TARGETS_TO_BUILD

llvm-config

llvm-config is a program that prints compiler flags, linker flags and other configuration-related information used by packages that need to link against LLVM libraries. Such configuration programs are usually scripts, but llvm-config is a compiled binary. Because the llvm-config compiled for the target cannot run on the host, the llvm-config compiled for the host needs to be placed in STAGING_DIR:

Figure 5: llvm-config

To get the correct output from llvm-config when configuring target packages that link against libLLVM.so, host-llvm must be built with the same options as the target LLVM (except that the LLVM tools are not built for the target), and the host-llvm tools must be linked against libLLVM.so (building only llvm-tblgen and llvm-config is not sufficient). For example, when Mesa 3D is built with LLVM support and the Gallium R600 or RadeonSI driver is selected, it checks for the AMDGPU backend:

```sh
llvm_add_target() {
    new_llvm_target=$1
    driver_name=$2

    if $LLVM_CONFIG --targets-built | grep -iqw $new_llvm_target ; then
        llvm_add_component $new_llvm_target $driver_name
    else
        AC_MSG_ERROR([LLVM target '$new_llvm_target' not enabled in your LLVM build. Required by $driver_name.])
    fi
}
```

If the AMDGPU backend is not built for the host, the llvm-config --targets-built check fails and so does the build. Another important setting is LLVM_LINK_LLVM_DYLIB: if this option is not enabled, llvm-config --shared-mode outputs "static" instead of "shared", which leads to libLLVM being linked statically.
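The shell test in Mesa's check relies on grep -iqw, i.e. a case-insensitive, whole-word match against the space-separated list of backends printed by llvm-config --targets-built. A rough C++ equivalent of that check is sketched below; the function names are hypothetical, and the list is passed in as a string instead of being obtained by running llvm-config.

```cpp
#include <algorithm>
#include <cctype>
#include <sstream>
#include <string>

// Case-insensitive comparison of two ASCII strings.
bool equalsIgnoreCase(const std::string& a, const std::string& b) {
    return a.size() == b.size() &&
           std::equal(a.begin(), a.end(), b.begin(), [](char x, char y) {
               return std::tolower(static_cast<unsigned char>(x)) ==
                      std::tolower(static_cast<unsigned char>(y));
           });
}

// Rough equivalent of `llvm-config --targets-built | grep -iqw TARGET`:
// true if TARGET appears as a whole, whitespace-separated word.
bool targetBuilt(const std::string& targetsBuilt, const std::string& target) {
    std::istringstream words(targetsBuilt);
    for (std::string w; words >> w;)
        if (equalsIgnoreCase(w, target)) return true;
    return false;
}
```

In a Buildroot build the list would come from the host llvm-config placed in STAGING_DIR, which is why host-llvm has to be built with the same backends as the target LLVM for this check to succeed.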

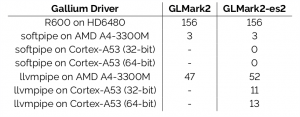

Some benchmarks

It was decided to run the GLMark2 and GLMark2-es2 benchmarks (available in Buildroot) to test OpenGL 2.0 and OpenGL ES 2.0 rendering performance, respectively, on different architectures. The available hardware made it possible to test the x86_64, ARM, AArch64 and AMDGPU LLVM backends and to verify that llvmpipe performs better than softpipe:

- Platform 1: x86_64 (HP ProBook)
  - Processor: AMD A4-3300M Dual Core (SSE3) @ 1.9 GHz
  - GPU: AMD Radeon Dual Graphics (HD6480G + HD7450)

- Platform 2: ARM (Raspberry Pi 2 Model B)
  - Processor: ARMv7 Cortex-A7 Quad Core @ 900 MHz
  - GPU: Broadcom Videocore IV

- Platform 3: ARM/AArch64 (Raspberry Pi 3 Model B)
  - Processor: ARMv8 Cortex-A53 Quad Core @ 1.2 GHz
  - GPU: Broadcom Videocore IV

Table 1: GLMark2 and GLMark2-es2 results

OpenCL

Once LLVM had been tested on the most common architectures, the next goal was to enable OpenCL support. This task involved multiple steps, as several dependencies need to be satisfied.

OpenCL is an API enabling general-purpose computing on GPUs (GPGPU) and other devices (CPUs, DSPs, FPGAs, ASICs, etc.), and is well suited to certain kinds of parallel computation, such as hash cracking (SHA, MD5, etc.), image processing and simulations. OpenCL presents itself as a library with a simple interface:

• Standardized API headers for C and C++

• The OpenCL library (libOpenCL.so), which is a collection of types and functions which all conforming implementations must provide.

The standard is designed so that several OpenCL platforms can coexist on one system, where each platform can see various devices. Each device has certain compute characteristics (number of compute units, optimal vector size, memory limits, etc.). The OpenCL standard allows loading OpenCL kernels, which are pieces of C99-like code that are JIT-compiled by the OpenCL implementation (most implementations rely on LLVM for this), and executing these kernels on the target hardware. Functions are provided to compile the kernels, load them, transfer data to and from the target devices, etc.
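The platform/device model can be pictured as a small data structure. The sketch below uses hypothetical `Platform` and `Device` types; the real API exposes the same information through opaque handles and query functions such as clGetPlatformIDs and clGetDeviceInfo. As an assumed example of how a host program might use these characteristics, it picks the device with the most compute units across all platforms.

```cpp
#include <string>
#include <vector>

// Hypothetical model of what an OpenCL host program can discover.
struct Device {
    std::string name;
    unsigned computeUnits;               // cf. CL_DEVICE_MAX_COMPUTE_UNITS
    unsigned long long globalMemBytes;   // cf. CL_DEVICE_GLOBAL_MEM_SIZE
};

struct Platform {
    std::string name;                    // one per installed implementation
    std::vector<Device> devices;
};

// Pick the device with the most compute units across all platforms.
// Returns nullptr when no platform exposes any device.
const Device* pickDevice(const std::vector<Platform>& platforms) {
    const Device* best = nullptr;
    for (const Platform& p : platforms)
        for (const Device& d : p.devices)
            if (!best || d.computeUnits > best->computeUnits) best = &d;
    return best;
}
```

Real programs often apply other criteria (device type, memory size, supported extensions); the compute-unit heuristic is only one possible choice.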

There are multiple open source OpenCL implementations for Linux:

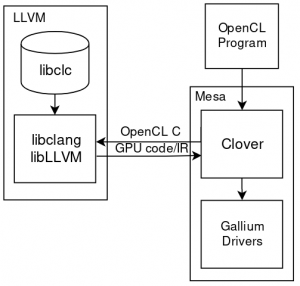

Clover (Computing Language over Gallium)

Clover is a hardware-independent OpenCL API implementation that works with Gallium drivers (hardware-dependent user-space GPU drivers). It was merged into Mesa 3D in 2012. It currently supports OpenCL 1.1 and is close to 1.2. It has the following dependencies:

- libclang: provides an OpenCL C compiler frontend and generates LLVM IR.

- libLLVM: LLVM IR optimization passes and hardware dependent code generation

- libclc: implementation of the OpenCL C standard library in LLVM IR bitcode providing device builtin functions. It is linked at runtime.

It currently works with the Gallium R600 and RadeonSI drivers.

Pocl

This implementation is OpenCL 1.2 standard compliant and supports some 2.0 features. The major goal of this project is to improve the performance portability of OpenCL programs, reducing the need for target-dependent manual optimizations. Pocl currently supports many CPUs (x86, ARM, MIPS, PowerPC), NVIDIA GPUs via CUDA (experimental), HSA-supported GPUs and multiple private out-of-tree targets. It also relies on libclang and libLLVM, but it has its own Pocl Builtin Lib instead of using libclc.

Beignet

It targets Intel GPUs (HD and Iris) starting with Ivy Bridge, and offers OpenCL 2.0 support for Skylake, Kaby Lake and Apollo Lake.

ROCm

This implementation by AMD targets ROCm (Radeon Open Compute) compatible hardware (HPC/Hyperscale), providing the OpenCL 1.2 API with OpenCL C 2.0. It became open source in May 2017.

Because of this fragmentation of OpenCL implementations (not even counting the proprietary ones), there is a mechanism that allows multiple implementations to coexist on the same system: the OpenCL ICD (Installable Client Driver). It needs the following components to work:

• libOpenCL.so (ICD loader): this library dispatches the OpenCL calls to OpenCL implementations.

• /etc/OpenCL/vendors/*.icd: these files tell the ICD loader which OpenCL implementations (ICDs) are installed on the system. Each file has a single line containing the name of the shared library with the implementation.

• One or more OpenCL implementations (the ICDs): the shared libraries pointed to by the .icd files.
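The discovery step performed by the ICD loader can be sketched as follows: scan the vendors directory, keep the files ending in .icd, and read the library name from the single line of each. This is a simplified illustration using C++17 std::filesystem, not the code of any actual ICD loader; the function name is hypothetical, and a real loader would go on to dlopen() each library and query its platforms, which is left out here. The directory is a parameter so the function can be tried on a scratch directory instead of /etc/OpenCL/vendors.

```cpp
#include <algorithm>
#include <filesystem>
#include <fstream>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Return the library names listed in the *.icd files of vendorsDir
// (normally /etc/OpenCL/vendors), sorted for deterministic output.
// A missing directory simply yields an empty list.
std::vector<std::string> listIcdLibraries(const fs::path& vendorsDir) {
    std::vector<std::string> libs;
    std::error_code ec;
    for (const auto& entry : fs::directory_iterator(vendorsDir, ec)) {
        if (!entry.is_regular_file() || entry.path().extension() != ".icd")
            continue;
        std::ifstream in(entry.path());
        std::string line;
        if (!std::getline(in, line)) continue;  // empty file: skip
        // Trim surrounding whitespace; what remains names the ICD's shared library.
        const char* ws = " \t\r\n";
        auto first = line.find_first_not_of(ws);
        if (first == std::string::npos) continue;
        auto last = line.find_last_not_of(ws);
        libs.push_back(line.substr(first, last - first + 1));
    }
    std::sort(libs.begin(), libs.end());
    return libs;
}
```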

Clover integration

Considering that the system available for testing has an AMD Radeon Dual Graphics GPU (integrated HD6480G + dedicated HD7450M) and that Mesa 3D is already present in Buildroot, it was decided to work with the OpenCL implementation provided by Clover. The diagram in Fig. 6 shows the components needed to set up the desired OpenCL environment and how they interact with each other.

Figure 6: Clover OpenCL implementation

host-clang

The first step was packaging Clang for the host, as it is needed to build libclc: this library is written in OpenCL C, and some of its functions are implemented directly in LLVM IR. The .cl sources are compiled into LLVM IR bitcode (.bc) with Clang, while the .ll sources are assembled into bitcode with llvm-as (the LLVM assembler).

Regarding the makefile for building host-clang, the path to the host’s llvm-config must be specified, and some manual configuration is needed: Clang is designed to be built as a tool inside LLVM’s source tree (LLVM_SOURCE_TREE/tools/clang), but Buildroot manages packages individually, so Clang’s source code cannot be extracted inside LLVM’s tree. Having Clang installed on the host is useful not only for building libclc; it also provides an alternative to GCC, which opens the possibility of creating a new toolchain based on it.

Clang for target

It is important to note that this package only installs libclang.so, not the Clang driver. When Clang was built for the host, it generated multiple static libraries (libclangAST.a, libclangFrontend.a, libclangLex.a, etc.) and finally a shared object (libclang.so) containing all of them. However, when building for the target, it produced multiple shared libraries and finally libclang.so. This resulted in the following error when running software that links against libOpenCL, which statically links with libclang (e.g. clinfo):

```
CommandLine Error: Option 'track-memory' registered more than once!
LLVM ERROR: inconsistency in registered CommandLine options
```

To avoid the duplicated symbols, the Clang package sets:

```make
CLANG_CONF_OPTS += -DBUILD_SHARED_LIBS=OFF
```
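Why duplicated code leads to this particular error can be illustrated with a simplified model of a global option registry. In LLVM, each command-line option registers itself in a process-wide registry when its static constructor runs, so if the same option definition ends up in two libraries loaded into one process and both copies reach the same registry, the name is registered twice. The sketch below is a hypothetical stand-in, not LLVM's CommandLine implementation: registering a name twice is reported as an error instead of silently overwriting the first entry.

```cpp
#include <set>
#include <stdexcept>
#include <string>

// Hypothetical process-wide registry of command-line option names.
class OptionRegistry {
public:
    // Called once per option definition, e.g. from a static constructor.
    void registerOption(const std::string& name) {
        if (!names_.insert(name).second)
            throw std::runtime_error("CommandLine Error: Option '" + name +
                                     "' registered more than once!");
    }
    bool has(const std::string& name) const { return names_.count(name) != 0; }

private:
    std::set<std::string> names_;
};

// Simulates loading one library whose static constructors register its options.
void loadLibraryOptions(OptionRegistry& registry) {
    registry.registerOption("track-memory");
}
```

Calling `loadLibraryOptions` once succeeds; calling it a second time on the same registry, as happens when two copies of the same code are loaded, triggers the error, which is what building the target libraries without shared component libraries avoids.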

libclc

This library provides an implementation of the library requirements of the OpenCL C programming language, as specified by the OpenCL 1.1 specification. It is designed to be portable and extensible, as it provides generic implementations of most library requirements, allowing targets to override them at the granularity of individual functions, using LLVM intrinsics for example. It currently supports AMDGCN, R600 and NVPTX targets.

There is a particular problem with libclc: when OpenCL programs call the clBuildProgram function to compile and link a program (generally an OpenCL kernel) from source at runtime, they require the clc headers to be located in /usr/include/clc. This is not possible because Buildroot removes /usr/include from the target: the embedded platform is not intended to store development files, mainly because no compiler is installed on it. However, since Clover relies on libclang and libLLVM to compile kernels at runtime, the clc headers must be stored somewhere on the target.

The file that adds the path to the libclc headers is invocation.cpp, located in src/gallium/state_trackers/clover/llvm inside Mesa’s source tree:

```cpp
// Add libclc generic search path
c.getHeaderSearchOpts().AddPath(LIBCLC_INCLUDEDIR, clang::frontend::Angled, false, false);

// Add libclc include
c.getPreprocessorOpts().Includes.push_back("clc/clc.h");
```

It was decided to store these files in /usr/share, which can be specified in libclc's Makefile by setting --includedir=/usr/share. Given that the clc headers are installed to a non-standard location, this path must also be specified in Mesa’s configure.ac. Otherwise, pkg-config outputs the absolute path to these headers in STAGING_DIR, which causes a runtime error when calling clBuildProgram:

```sh
if test "x$have_libclc" = xno; then
    AC_MSG_ERROR([pkg-config cannot find libclc.pc which is required to build clover. Make sure the directory containing libclc.pc is specified in your PKG_CONFIG_PATH environment variable. By default libclc.pc is installed to /usr/local/share/pkgconfig/])
else
    LIBCLC_INCLUDEDIR="/usr/share"
    LIBCLC_LIBEXECDIR=`$PKG_CONFIG --variable=libexecdir libclc`
    AC_SUBST([LIBCLC_INCLUDEDIR])
    AC_SUBST([LIBCLC_LIBEXECDIR])
fi
```
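The underlying issue is that a path valid on the build machine (inside STAGING_DIR) gets compiled into a binary that runs on the target. The relationship can be shown with a hypothetical helper that turns a staging path back into the path the target will see by stripping the STAGING_DIR prefix; hard-coding LIBCLC_INCLUDEDIR="/usr/share" in configure.ac achieves the same result for this one variable. The helper is an illustration only and is not part of Mesa or Buildroot.

```cpp
#include <string>

// Hypothetical helper: map a path inside STAGING_DIR (the build-time sysroot)
// to the corresponding runtime path on the target. Paths outside the staging
// directory are returned unchanged.
std::string toTargetPath(const std::string& path, std::string stagingDir) {
    // Ignore trailing slashes so "/a/sysroot/" and "/a/sysroot" behave alike.
    while (!stagingDir.empty() && stagingDir.back() == '/') stagingDir.pop_back();
    bool underStaging =
        !stagingDir.empty() &&
        path.compare(0, stagingDir.size(), stagingDir) == 0 &&
        (path.size() == stagingDir.size() || path[stagingDir.size()] == '/');
    if (!underStaging) return path;
    std::string rest = path.substr(stagingDir.size());
    return rest.empty() ? "/" : rest;
}
```

The prefix check requires a '/' right after STAGING_DIR, so a sibling directory whose name merely starts with the same characters is not mistaken for a staging path.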

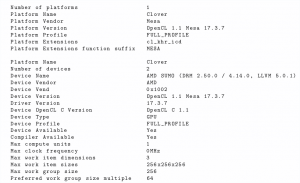

Verifying Clover installation with Clinfo

Clinfo is a simple command-line application that enumerates all possible (known) properties of the OpenCL platform and devices available on the system. It tries to output all possible information, including those provided by platform-specific extensions. The main purposes of Clinfo are:

• Verifying that the OpenCL environment is set up correctly: if clinfo cannot find any platform or devices (or fails to load the OpenCL dispatcher library), chances are high that no other OpenCL application will run.

• Verifying that the OpenCL development environment is set up correctly: if clinfo fails to build, chances are high that no other OpenCL application will build.

• Reporting the actual properties of the available devices.

Once installed on the target, clinfo successfully found Clover and the devices available to work with, providing the following output:

Figure 7: clinfo

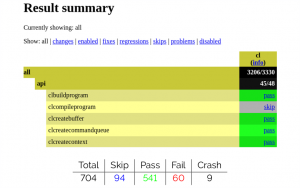

Testing Clover with Piglit

Piglit is a collection of automated tests for OpenGL and OpenCL implementations. The goal of this project is to help improve the quality of open source OpenGL and OpenCL drivers by providing developers with a simple means to perform regression tests. Once Clover was installed on the target system, it was decided to run Piglit in order to verify the conformance of Mesa’s OpenCL implementation, using the Buildroot packaging from Romain Naour’s series (http://lists.busybox.net/pipermail/buildroot/2018-February/213601.html).

To run the OpenCL test suite, the following command must be executed:

```sh
piglit run tests/cl results/cl
```

The results are written in JSON format and can be converted to HTML by running:

```sh
piglit summary html --overwrite summary/cl results/cl
```

Figure 8: Piglit results

Most of the tests that failed can be classified in the following categories:

• Program build with optimization options for OpenCL C 1.0/1.1+

• Global atomic operations (add, and, or, max, etc.) using a return variable

• Floating point multiply-accumulate operations

• Some builtin shuffle operations

• Global memory

• Image read/write 2D

• Tail calls

• Vector load

Some failures are due to missing hardware support for particular operations, so it would be useful to run Piglit on a more recent GPU using the RadeonSI Gallium driver in order to compare the results. It would also be interesting to test, on both GPUs, which packages can benefit from OpenCL support through Clover.

Conclusions and future work

Currently, LLVM 5.0.2, Clang 5.0.2 and LLVM support for Mesa 3D are available in Buildroot 2018.05. The update of these packages to version 6.0.0 has already been done and will be available in the next stable release.

Regarding future work, the most immediate goal is to get OpenCL support for AMD GPUs merged into Buildroot. The next step will be to add more packages that rely on LLVM/Clang and OpenCL. On the other hand, creating a toolchain based on LLVM/Clang is still being discussed on the mailing list and requires agreement from the project's core developers.

The complete report can be downloaded here: LLVM Clang integration into Buildroot. It shows the development of the project in detail and also contains a section dedicated to the VC4CL package, which enables OpenCL on the Broadcom Videocore IV present in all Raspberry Pi models.