Starting from the 2017.05 release, Buildroot uses GitLab-CI to run automated tests.

Initially, only defconfigs (Buildroot configuration examples) were tested by GitLab-CI. Over the years, several development scripts that were originally intended to be run manually (check-package, test-pkg, check-flake8, get-developers) have also been put under GitLab-CI testing. More importantly, the Buildroot testing infrastructure (run-tests), which provides runtime tests for many packages, is executed by GitLab-CI.

This work enables every Buildroot developer who forked the project to use GitLab shared runners for testing their work before contributing to Buildroot.

This article summarizes the improvements made since 2017.05 (see the Bootlin article “Recent improvement in Buildroot QA” from 2017) and shows how to use them with Buildroot 2021.11.

GitLab-CI at a glance

GitLab-CI is the brand name for all the CI/CD features of GitLab.

GitLab-CI ships with the GitLab server software and needs no additional installation on the server side, but GitLab Runner must be installed on the machine(s) that will run the jobs.

Here is an example of the GitLab-CI infrastructure with one GitLab server and two GitLab runners:

On each runner, the execution environment may be a Docker container or the runner host itself.

The .gitlab-ci.yml file is the main definition file for CI/CD automation.

It specifies jobs & pipelines.

It is mostly declarative:

- It specifies CI/CD objects and their attributes.

- Attributes may be declared in any order.

- Some attributes contain an ordered list.

The exception is the script attribute of a job object: it is an ordered list of command lines that run in sequence, so that part is imperative.

A GitLab-CI job is a present or past execution instance of the job script defined in the .gitlab-ci.yml file.

Each job is identified by a unique ID in GitLab.

Once started, a job runs until completion. If the job hangs, GitLab-CI kills it after a timeout (3 hours by default when using GitLab shared runners). When finished, a job has a binary status: passed or failed.

A pipeline is a present or past execution instance of CI/CD. A pipeline might not include all jobs described in the .gitlab-ci.yml file. A pipeline can have a trigger condition (on git push, on tag, manual trigger, etc.). Each pipeline has a unique ID in GitLab and is linked to one commit of the GitLab repository.

When finished, a pipeline has a binary status: passed or failed. A job ID is always linked to one pipeline ID.

After this short GitLab-CI introduction, we can create our own Buildroot fork on GitLab.com.

Fork the Buildroot project on GitLab

Go to the Buildroot repository (mirror) hosted on GitLab.com (https://gitlab.com/buildroot.org/buildroot/) and fork the Buildroot project into your GitLab account.

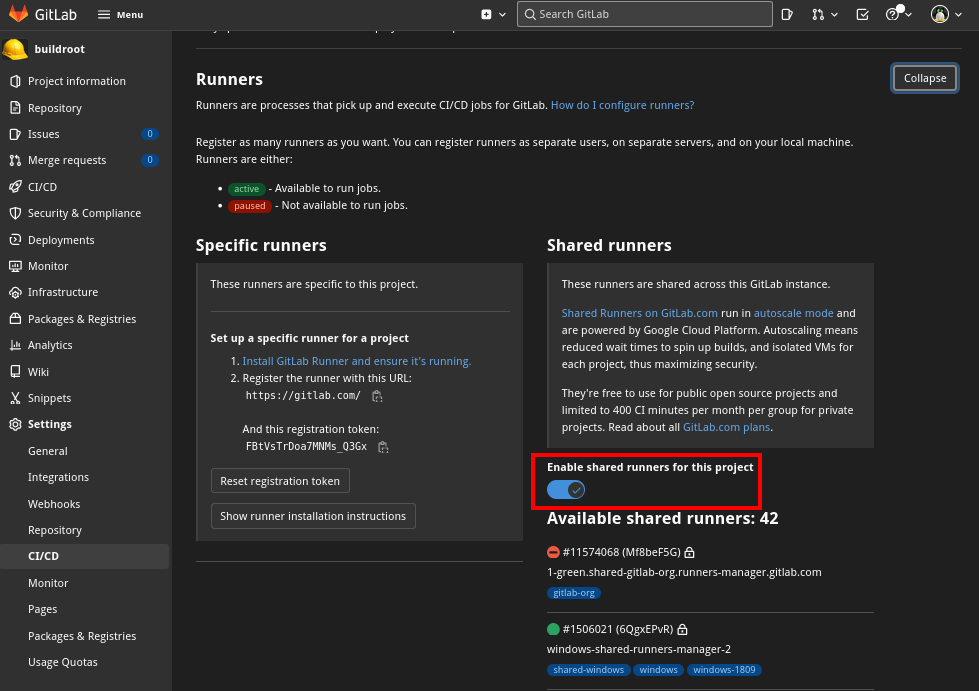

Since we are going to use the shared runners provided by GitLab, check that they are enabled in the Settings -> CI/CD menu (they are disabled by default).
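The examples later in this article push to a git remote named “gitlab”. Here is a minimal sketch of how such a remote could be set up. It assumes the fork kept the default project name "buildroot" under your GitLab user name. The helper names fork_url and add_gitlab_remote are made up for illustration and are not part of Buildroot.

```shell
#!/bin/sh
# Hypothetical helpers, not part of Buildroot.

# Print the HTTPS clone URL of a personal fork.
# Assumption: the fork kept the default project path "<user>/buildroot".
fork_url() {
    printf 'https://gitlab.com/%s/buildroot.git\n' "$1"
}

# Register the fork as a remote named "gitlab" in the current git repository.
add_gitlab_remote() {
    git remote add gitlab "$(fork_url "$1")"
}
```

From a local Buildroot clone, calling add_gitlab_remote with your user name and then running git remote -v should list the new remote.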

Buildroot pipeline overview

Although it is not yet well documented in the manual, Buildroot provides a way to trigger a pipeline that runs different jobs depending on the trigger condition.

First, we will list all available jobs and then describe how to trigger a pipeline to build them.

Basic tests

Basic tests group all the tests that can be executed on every commit (they must be fast to run).

- check-developers (GitLab job since 2017.08):

Checks that the get-developers script can parse the DEVELOPERS file. This script is useful to find the developers in charge of a particular package.

- check-flake8 (GitLab job since 2018.05):

Checks that all Python scripts in the tree follow PEP 8 -- Style Guide for Python Code.

- check-package (GitLab job since 2018.05):

Checks that all packages in Buildroot follow the recommended coding style. It parses all *.mk, Config.*, *.hash and *.patch files.

For Buildroot 2022.02 (or later), check-package will also check all SysV init scripts using the shellcheck tool.

When pushing a branch or new commits to a GitLab Buildroot repository, a pipeline is triggered to run these checks (except when defconfig or runtime tests are explicitly requested, see the following sections).

Although these scripts are intended to check upstream packages, it is highly recommended to run them on custom packages too (from a BR2_EXTERNAL), since they catch many mistakes such as typos.
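The same checks can also be run locally before pushing. The sketch below wraps the commands that the three basic jobs are assumed to run in a Buildroot tree of this era (utils/get-developers -v, make check-flake8, make check-package). The function name run_basic_checks is hypothetical, and the exact commands should be compared with the CI template of your Buildroot version.

```shell
#!/bin/sh
# Hypothetical helper: run, in a local Buildroot tree, the commands that the
# three basic jobs are assumed to run. Stops at the first failing check.
run_basic_checks() {
    # $1: path to the top of a Buildroot source tree
    (
        cd "$1" || exit 1
        utils/get-developers -v &&   # validate the DEVELOPERS file
        make check-flake8 &&         # Python style check
        make check-package           # packaging coding style check
    )
}
```

The function returns a non-zero status as soon as one check fails, which mirrors the pass/fail status of a GitLab-CI job.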

Example of basic tests:

This example uses a local Buildroot git repository with a remote named “gitlab” that points to your GitLab Buildroot fork. Pushing your branch triggers the basic tests.

$ git checkout origin/master -b my-project

$ git push gitlab my-project

On your GitLab Buildroot fork, go to CI/CD -> pipelines to see the newly created pipeline. Click on it to see all jobs it contains:

You will notice that this first pipeline is divided into two stages called “generate-gitlab-ci” and “build” followed by a child-pipeline (downstream).

The first stage generates a GitLab-CI YAML file from a template. The list of jobs depends on the number of defconfigs or tests. The real (interesting) testing is done in the child pipeline (click on it).

The child pipeline contains only one stage called “test” with 3 jobs:

If a test fails, as explained above, the corresponding job and the pipeline will also fail.

Here is an example of a test failing because a patch carries patch numbering, which violates the coding style:

Basic testing can be extended to check that all defconfig files are correctly imported into Buildroot (that is, that no option was lost in the process). To do so, push a branch whose name ends with “-basics”:

$ git checkout origin/master -b my-project-basics

$ git push gitlab my-project-basics

Defconfig tests

Defconfig tests group all the Buildroot defconfig build tests. Each job builds a single defconfig using the default Buildroot internal toolchain, which verifies that the defconfig provided for a specific board builds correctly. Building each defconfig takes a long time, mainly because of the internal toolchain and kernel builds.

Among all defconfigs, the qemu defconfigs are the only ones that are runtime tested, using the host-qemu provided by Buildroot (boot tested since 2020.05). The “qemu” prefix is detected by a script that retrieves the qemu command line from the readme.txt files (board/qemu/<arch>/readme.txt). The script monitors the system boot by waiting for specific strings such as "buildroot login:" or the shell prompt “# “. When needed, the script can send strings such as the user name “root” to log in.
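The idea of waiting for a known string can be illustrated with a much simpler, non-interactive check on a captured console log. This is only a sketch: the script used by Buildroot talks to the running emulator, whereas the hypothetical function boot_reached_prompt below just searches a file for the two prompts mentioned above.

```shell
#!/bin/sh
# Hypothetical helper: decide from a captured serial console log whether the
# system booted far enough to show a login prompt or a root shell prompt.
boot_reached_prompt() {
    # $1: file holding the console output of the emulated board
    grep -q -e 'buildroot login:' -e '^# ' "$1"
}
```

A log that ends in a kernel panic makes the function return a non-zero status, while a log containing "buildroot login:" makes it succeed.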

Here is the current list of qemu defconfigs that cover almost all architectures supported by Buildroot:

aarch64, armv5, armv7a, m68k, microblaze, mips32, mips64, nios2, or1k (openrisc), ppc (powerpc), ppc64, riscv32, riscv64, s390x, sh4, sparc, sparc64, x86, x86_64, xtensa.

Triggering a new pipeline to test all defconfigs is easy: the selection is based on the name of the branch pushed to the git repository hosted by GitLab.

Create a new branch ending with “-defconfigs” and push it to trigger the jobs:

$ git checkout origin/master -b my-testing-defconfigs

$ git push gitlab my-testing-defconfigs

But generally we are interested in only one defconfig at a time.

Create a branch with the same name as the defconfig file to be tested (here for the 64-bit Raspberry Pi 4):

$ git checkout origin/master -b raspberrypi4_64_defconfig

$ git push gitlab raspberrypi4_64_defconfig

Since 2022.02, multiple defconfigs can be tested at the same time by filtering with a pattern.

Create a branch whose name ends with “-defconfigs-qemu” to test only the QEMU defconfigs:

$ git checkout origin/master -b my-testing-defconfigs-qemu

$ git push gitlab my-testing-defconfigs-qemu
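The branch naming conventions used in the examples so far can be summarized in a small shell function. It is a sketch of the conventions as described in this article, not the script that Buildroot itself uses to generate the pipeline. The name pipeline_kind and the returned strings are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper mirroring the branch naming conventions described in
# this article; it is not the script Buildroot itself uses.
pipeline_kind() {
    # $1: branch name pushed to the GitLab fork
    case "$1" in
        *-basics)        echo "basic tests + defconfig check" ;;
        *-defconfigs)    echo "all defconfigs" ;;
        # Pattern filtering is described above as available since 2022.02.
        *-defconfigs-*)  echo "defconfigs matching '${1##*-defconfigs-}'" ;;
        *_defconfig)     echo "single defconfig" ;;
        *)               echo "basic tests" ;;
    esac
}
```

For instance, pipeline_kind my-testing-defconfigs-qemu reports a pattern-filtered defconfig build, while a branch name with no special suffix such as my-project falls back to the basic tests.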

To give an idea of the time needed to build one defconfig, here is the build time of qemu_x86_64_defconfig (about 47 minutes):

(In red, all packages built for the toolchain)

With 269 defconfig files currently built in about 50 minutes each, testing all of them sequentially would require about 224 hours (269 × 50 min). GitLab shared runners are not very fast build machines, but building all defconfigs in parallel allows all tests to complete within 3 hours (the job timeout limit).
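The estimate can be reproduced with shell integer arithmetic from the two figures in the text (269 defconfigs, about 50 minutes each). The variable names are chosen for this example only.

```shell
#!/bin/sh
# Back-of-the-envelope estimate using the figures quoted above.
defconfigs=269        # number of defconfig files
minutes_each=50       # approximate build time per defconfig

total_minutes=$((defconfigs * minutes_each))
total_hours=$((total_minutes / 60))   # integer division, rounds down

echo "sequential build time: ${total_minutes} min (~${total_hours} h)"
```

The gap between this sequential total and the 3 hour job limit is what makes running one job per defconfig in parallel worthwhile.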

Runtime tests

The Buildroot runtime testing infrastructure, introduced with Buildroot 2017.05 and used with GitLab-CI since 2017.08, allows testing several well-known use cases. Whenever possible, each test case is runtime tested in QEMU.

It covers the main parts of Buildroot:

- Buildroot core testing (BR2_EXTERNAL, rootfs overlay, hardening etc…)

- Download infrastructure (git clone, github and gitlab helper)

- Filesystem images (ext, iso9660, jffs2, oci, squashfs, ubifs etc…)

- Init boot methods (busybox init, systemd, systemd with SELinux)

- External toolchain infrastructure (sysroot import, Glibc, musl, uClibc-ng)

- Bootloader build tests that are not covered by the autobuilders

- Tests for many packages. The aim of each package test is to verify that the package is correctly built and deployed to the rootfs. If possible, a runtime check is performed to verify that the binary runs correctly. This testing is not intended to run the package's own testsuite, see the discussion about the systemd testsuite proposal.

At the time of writing this article, there are 431 tests in the Buildroot testsuite. Each test is executed by one GitLab-CI job.

A custom/personal project based on Buildroot may use the runtime test infrastructure to add additional tests using a custom package from a BR2_EXTERNAL. See the Python Nose2 documentation for details on how to write a test.

Usually it is a good idea to provide a test case when adding a new package. But before that, we have to make sure that any new package builds fine when cross-compiling for several architectures supported by Buildroot, with several toolchain versions and libc implementations. This is where the “test-pkg” script is helpful.

Test-pkg tests

The test-pkg script, which tests a specific package with several (currently 45) toolchain configurations, has been available in Buildroot since 2017.02. It uses the same set of prebuilt toolchains as the autobuilders. The initial problem with this script is that it tests each toolchain configuration sequentially, so it takes a long time to complete.

Since Buildroot 2021.11, it’s possible to dispatch each toolchain testing into one GitLab job to parallelize tests.

The main problem is that we need to provide a defconfig fragment to the test-pkg script, so that only the package we want to test is enabled in the tested Buildroot configuration.
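For a local run, such a fragment is just a small text file. The sketch below writes a one-line fragment. The function name write_fragment and the file name busybox.config are hypothetical, and a real package may need more options than the single one shown.

```shell
#!/bin/sh
# Hypothetical helper: write a one-line configuration fragment that enables
# only the package under test.
write_fragment() {
    # $1: Buildroot option of the package, e.g. BR2_PACKAGE_BUSYBOX
    # $2: output file, e.g. busybox.config
    printf '%s=y\n' "$1" > "$2"
}
```

After write_fragment BR2_PACKAGE_BUSYBOX busybox.config, the file can be passed to the script from the top of the Buildroot tree, for example with ./utils/test-pkg -c busybox.config -p busybox, following the usage shown in the Buildroot manual.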

In the screenshot below, notice the “---” line below the commit log. All lines below this line are removed from the commit when the maintainer imports the patch using “git am”. In GitLab-CI, the commit log is available in the variable CI_COMMIT_DESCRIPTION, including all the text below the “---” line (see the list of predefined variables).

The "---" marker therefore makes it possible to add (and hide) the defconfig fragment for the package under test in the last commit of the series adding a new package.

---

test-pkg config:

SOME_OPTION=y

# OTHER_OPTION is not set

SOME_VARIABLE="some value"
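To make the mechanism concrete, here is a sketch of how the fragment could be recovered from a commit description. It assumes that everything after the "test-pkg config:" line belongs to the fragment. The function name extract_fragment is hypothetical and this is not necessarily how Buildroot implements the extraction.

```shell
#!/bin/sh
# Hypothetical helper: print every line that follows the "test-pkg config:"
# marker in a commit description read from standard input.
extract_fragment() {
    awk 'found { print } /^test-pkg config:$/ { found = 1 }'
}
```

In a CI job, the description could be fed to it with printf '%s\n' "$CI_COMMIT_DESCRIPTION" | extract_fragment, redirecting the output to a file. A commit without the marker yields an empty output, which is an easy condition to test for.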

Here is an example with the busybox version bump. The last commit contains the keywords that enable test-pkg for busybox. Testing with 45 toolchains now takes only 5 minutes.

During the “generate-gitlab-ci” step, the output of the test-pkg script lists all the toolchains evaluated. No job is created if the tested package is not available for a given toolchain.

Conclusion

Testing with GitLab-CI eases "smoke testing" a new package with a wide range of toolchains by offloading (and parallelizing) all build jobs to GitLab runners. This is really useful to detect issues before contributing to the project. A link to the GitLab-CI results is a good sign that the new package has been tested and is safe to merge. Be careful: this is only build testing, and there is no guarantee that the binary really works.

To cope with the regular package updates in Buildroot, adding a runtime test makes it possible to check that a binary still works even if its dependencies changed or the package providing it has been updated. The runtime testing infrastructure only checks that a package is correctly packaged in Buildroot. It does not replace the testing done by the upstream package maintainers.

The Buildroot project itself uses a scheduled pipeline to run the whole testsuite (run-tests) on Monday and all defconfigs on Friday. The results are reported the next day in the “Daily results” message on the Buildroot mailing list.

Feel free to contribute to the Buildroot project by investigating these issues and sending your analysis or, even better, a patch.